Tackling Debugging and Developing Effective Prompts for API-products based on ChatGPT

Prompt Engineering is not the same as writing code, it should be...

Introduction

The rise of conversational AI technology, particularly ChatGPT, has significantly impacted the way businesses operate and build products. As a result, there's a growing demand for "Prompt Engineers" with salaries reaching as high as $300,000. This is because prompt engineering requires a different skill set compared to traditional coding.

In this article, we'll help you maximize the chances of success for your prompt-based conversational AI products by providing techniques for improving the prompt engineering process. We present several innovative strategies for debugging and enhancing the performance of prompt templates.

We'll explore five key aspects of prompt optimization and development that, when combined, can significantly increase the likelihood of your prompt functioning as intended:

Prompt Debug Logging: Similar to logging.debug() in code

Prompt Optimization Debugging: Analogous to IDE suggestions and error identification

Machine-Readable Prompt Design

One-Shot or Few-Shot Prompting

Chain of thought reasoning that is compatible with Machine-Readable Prompt Design

Developing Prompts vs Developing Code

When writing code, software engineers have a plethora of tools at their disposal to ensure their code meets product requirements and functions as intended.

Programming languages offer:

Strict syntax, enforced by a compiler or interpreter

Error reporting for violations or exceptions

Well-documented functionalities, with significant online examples and support

Discrete, deterministic, and consistent behavior

Code viewed as precise step-by-step sequences with defined outputs and side-effects

Consistent output given the same input (excluding explicit randomness)

Numerous development tools, such as IDEs for semantic problem identification, debugging assistance, and automatic documentation linking

However, tools for prompt engineering differ significantly and generally do not provide insight into the inner workings of a prompt. Platforms such as CognizantAI, Rasa X, and BotStar can help develop conversational AI applications, and many online resources offer tips, but real-time, active debugging generally does not exist for prompt engineering.

Some prompt engineering resources:

Techniques to improve reliability (openai-cookbook, GitHub)

Preventing LLM Hallucination With Contextual Prompt Engineering — An Example From OpenAI

Prompt-based "programming" differs from coding languages like Python or Java as it:

Lacks a formal language structure; instead, it resembles conversational text

Is not predictable due to the vast number of network parameters

Is not deterministic, with randomness built-in by default

Lacks user-defined variables and internal state in the conventional sense

Lacks IDEs comparable to those used for developing and debugging code

Does not have explicit syntax or error reporting, except for token limit violations

Unique Challenges in Prompt Engineering

During prompt development, engineers may encounter several challenges that are not typically found in conventional coding environments:

Unreliable adherence to instructions - Conversational AI systems may not always follow directions as expected and can occasionally disregard specific requirements.

Editorializing output - When crafting prompts for personal use, it might be acceptable for the AI to provide additional unsolicited information, such as extra facts, caveats, or notes, when answering a question (refer to the figure below), but when trying to make a product, extra output could be a problem.

Random and inconsistent behaviors - A prompt requesting a specific task, like "write a title," might elicit unexpected outputs, such as quoting the result, appending "Title," or other arbitrary additions not explicitly requested.

Fabricating information - Modern conversational AI systems built on the attention-based Transformer architecture are prone to generating output that is not grounded in the input or in any source knowledge, a behavior known as hallucination. This can include confidently stating false information.

The example below demonstrates making up scores as well as output inconsistency, which can complicate automated parsing. Although this is a simplified case, it highlights how the "score" can be preceded by various terms, such as "score," "click," or even an arbitrary "#" symbol. In this instance, GPT-3 likely associates tweets with hashtags. Moreover, no tweet, regardless of its quality, would realistically achieve such a high CTR. The trailing "#" symbol might be due to a token limit on the output, which is another potential issue to be aware of.

Solution Overview

In this section, we delve into the role of a prompt engineer and the proposed techniques, tools, and processes for developing or enhancing a prompt template.

The software architecture for a product built on a conversational AI API, as depicted in the figure above, consists of the following steps. First, the system receives an input, which can come from a user and/or another data source. This input is then processed, for example by executing a semantic search (similar to what Bing's chatbot does), data augmentation, or other input cleansing. Next, a prompt is generated by combining the data with the prompt_template. The generated prompt is sent to an API for execution, which may use a local LLM-based system or an external service like OpenAI. Finally, the system processes the result to produce the desired output.
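The steps above can be sketched as a small pipeline. This is a minimal illustration, not the article's implementation: the function names, the whitespace-only cleansing step, and `fake_llm` (a stand-in for the real API call) are all assumptions made for the example. The `{{ARTICLE_TEXT}}` placeholder mirrors the one used in the templates later in this article.

```python
from typing import Callable


def preprocess(raw_input: str) -> str:
    """Input processing step: collapse whitespace (stand-in for search or augmentation)."""
    return " ".join(raw_input.split())


def generate_prompt(data: str, prompt_template: str) -> str:
    """Prompt generation step: merge the processed data into the template."""
    return prompt_template.replace("{{ARTICLE_TEXT}}", data)


def postprocess(raw_output: str) -> str:
    """Result processing step: trim whitespace around the model output."""
    return raw_output.strip()


def run_pipeline(raw_input: str, prompt_template: str, call_llm: Callable[[str], str]) -> str:
    """Chain the steps: input -> processing -> prompt -> API call -> output."""
    prompt = generate_prompt(preprocess(raw_input), prompt_template)
    return postprocess(call_llm(prompt))


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for the API call; always returns a canned reply."""
    return "  A canned title  \n"


result = run_pipeline("some   article\ntext", "ARTICLE:\n{{ARTICLE_TEXT}}\nTITLE:", fake_llm)
print(result)
```

Passing the model call in as a function keeps the pipeline testable without network access; a real API wrapper can be swapped in later.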

The proposed approach involves the following steps:

Gather product requirements, including expected inputs, example outputs, and any dynamic data generated or utilized.

Specify the exact inputs for the prompt_generator module and the desired outputs, such as:

Inputs: a set of search results, a cleaned user query, specific user data, additional context information, etc.

Outputs: an answer to a user's question, a summary of search results, a refined version of the user's input email, an array of suggested titles with scores, etc.

Develop an initial prompt template (refer to examples below).

Test the prompt template with multiple inputs.

If the template is satisfactory, proceed. Otherwise, refine the template by:

Examining the outputs and implementing improvements using one or more of the following methods:

Prompt debug logging

Prompt template optimization and debugging

Incorporating examples via one-shot or few-shot prompting

Adding chain of thought reasoning compatible with machine-readable prompt design

Repeat step 4 until the template is satisfactory or further improvements are deemed unattainable.
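The test-and-refine loop above can be supported by a small harness that runs one template against several inputs and collects the responses that fail a check. The sketch below is only an illustration: `evaluate_template`, `fake_llm`, and `no_leading_quote` are hypothetical names, and the fake model exists purely so the example runs offline.

```python
from typing import Callable, Iterable, List, Tuple


def evaluate_template(
    prompt_template: str,
    inputs: Iterable[str],
    call_llm: Callable[[str], str],
    is_satisfactory: Callable[[str], bool],
) -> List[Tuple[str, str]]:
    """Run the template on every input and collect (input, response) pairs that fail."""
    failures = []
    for text in inputs:
        prompt = prompt_template.replace("{{ARTICLE_TEXT}}", text)
        response = call_llm(prompt)
        if not is_satisfactory(response):
            failures.append((text, response))
    return failures


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for the API: quotes its reply for one input only."""
    return '"Quoted title"' if "economy" in prompt else "Plain title"


def no_leading_quote(response: str) -> bool:
    """Example requirement: the output must not begin with a double quote."""
    return not response.strip().startswith('"')


failures = evaluate_template(
    "ARTICLE:\n{{ARTICLE_TEXT}}\nTITLE:",
    ["worldcom trial", "german economy", "phone study"],
    fake_llm,
    no_leading_quote,
)
print(f"{len(failures)} of 3 inputs failed")
```

An empty `failures` list corresponds to "the template is satisfactory"; otherwise the collected pairs show which inputs to examine when refining the template.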

There are several other things that can be done to improve the quality of the output; some are listed in the Pro-Tips section at the bottom.

A Real Example of Prompt Debugging and Development

To illustrate the proposed process, we created an example problem and walk through how to develop, debug, and improve a prompt that addresses it.

The task: Develop a function which takes as input a news article text, and outputs a Tweet designed to get the most interest (and later a score).

To test this, we will use article data from the "Text Classification of News Articles" dataset (raw data: Raw csv).

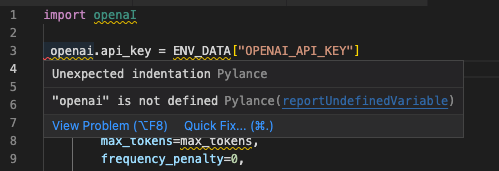

First, let's write some basic functions to query the OpenAI API and to deal with prompt templates:

Simple API code to utilize prompt templates

import os
import csv

from dotenv import load_dotenv
import openai

# Load the OpenAI key from a local .env file into the environment
load_dotenv()
ENV_DATA = os.environ

DEFAULT_MODEL = "gpt-3.5-turbo"

# Functions to generate and send the prompt
def generate_prompt(article_text, prompt_template):
    # Replace the {{ARTICLE_TEXT}} placeholder with the article text
    prompt = prompt_template.replace("{{ARTICLE_TEXT}}", article_text)
    return prompt

# Send the prompt to OpenAI's API
# (openai.ChatCompletion is the interface of the pre-1.0 openai Python package)
def get_response(prompt, temperature=0.15, max_tokens=1000, debug=False, model=DEFAULT_MODEL):
    openai.api_key = ENV_DATA["OPENAI_API_KEY"]
    response = openai.ChatCompletion.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        temperature=temperature,
        max_tokens=max_tokens,
        top_p=1,
        frequency_penalty=0,
        presence_penalty=0
    )
    # Return only the text of the first choice
    res = response["choices"][0]["message"]["content"]
    return res

Some code to load the article data:

def load_articles(article_file):
    # Lazily yield one dict per CSV row
    with open(article_file, newline='') as csvfile:
        reader = csv.DictReader(csvfile)
        for row in reader:
            yield row

def take(num, iterable):
    # Return the first num elements of the iterable as a list
    return [elem for _, elem in zip(range(num), iterable)]

article_file = "./BBC News Train.csv"

Let's play with the functions and data to see how they work…

Let's start with the simplest prompt: simply asking GPT-3.5-Turbo to create a ‘catchy title’ for an article.

prompt_template = """

Please create a catchy title for ARTICLE:

ARTICLE:

{{ARTICLE_TEXT}}

TITLE:"""

# Let's verify the functions work and explore what is happening
article_iterator = load_articles(article_file)
arr = take(5, article_iterator)
prompt = generate_prompt(arr[0]['Text'], prompt_template)
get_response(prompt)

'"WorldCom Whistleblower Takes the Stand in Bernie Ebbers\' Fraud Trial"'

The above shows how we can start with a prompt_template and then query the OpenAI API to provide some output. However, the above is insufficient to create an actual “product” that meets the requirements. In addition, it is not exactly right since the system seemed to unnecessarily add double quotes.

Improving the prompt_template

Goals:

System “works” in that it creates interesting tweets, automatically running against the article data in-bulk

Output is reliably machine parsable

Output is consistent - i.e. always or never having double quotes around the tweet

Advanced - some type of score

Improved initial prompt and test run

When creating prompt templates it is recommended to do several things:

Be very clear with the directions

Explicitly specify the “context” i.e. the system is an AI talking to a reader, etc…

Write directions in the prompt as a sequence of steps to further clarify what you want the system to do (and not to do).

Add clear response section separators for output that is going to be machine processed

In the example above, with the simple prompt, the system added double quotes seemingly at random. Because this behavior is inconsistent, let's create a new prompt that follows the main recommendations and instructs the system not to add double quotes.

prompt_template = """You are an AI whose job it is to create tweets that will be processed by an API and are likely to get readers interested and click through to read the article.

1: Examine the text of ARTICLE

2: Consider aspects of the article that could be of interest to average readers

3: Generate a tweet that is likely to get clicked on, without surrounding double quotes

Notes:

- The link will be added later

ARTICLE:

{{ARTICLE_TEXT}}"""

# Let's try this for a few articles
for article in arr:
    text = article['Text']
    prompt = generate_prompt(text, prompt_template)
    response = get_response(prompt)
    print("#####\nResponse: {}\n\nArticle: {}\n\n\n".format(response, text[0:300]))

#####

Response: Former WorldCom CEO Bernie Ebbers pleads not guilty to fraud and conspiracy charges as his defense team calls a company whistleblower to the stand. Cynthia Cooper, WorldCom's ex-head of internal accounting, testified that external auditors approved the company's accounting practices. Read more about the ongoing trial. #WorldCom #BernieEbbers #FraudTrial

Article: worldcom ex-boss launches defence lawyers defending former worldcom chief bernie ebbers against a battery of fraud charges have called a company whistleblower as their first witness. cynthia cooper worldcom s ex-head of internal accounting alerted directors to irregular accounting practices at th

#####

Response: German business confidence falls in February, signaling a slower economic recovery for Europe's largest economy. The decline in the manufacturing and retail sectors is a cause for concern. Read more about the latest economic news in Germany. #economy #Germany #businessconfidence

Article: german business confidence slides german business confidence fell in february knocking hopes of a speedy recovery in europe s largest economy. munich-based research institute ifo said that its confidence index fell to 95.5 in february from 97.5 in january its first decline in three months. the stu

#####

Response: "Is the world economy getting worse? According to a recent BBC poll, citizens in a majority of nations surveyed believe so. However, when it comes to their own family's financial outlook, a majority in 14 countries are positive about the future. Find out more:" #economy #poll #BBC

Article: bbc poll indicates economic gloom citizens in a majority of nations surveyed in a bbc world service poll believe the world economy is worsening. most respondents also said their national economy was getting worse. but when asked about their own family s financial outlook a majority in 14 countries

Even though we explicitly told the system not to double quote the output, it still did so for some articles. This illustrates the challenge of writing prompts the way one would write code, and highlights two key problems:

Not following directions: Do not double quote

Inconsistency: Some articles were quoted, others were not
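Both problems can be detected automatically once responses are collected. The following sketch flags responses that begin with a double quote and reports whether a batch is consistent. The helper names are hypothetical, and the sample strings are abbreviated stand-ins for the responses shown above, not complete model output.

```python
from typing import Dict, List


def starts_with_double_quote(response: str) -> bool:
    """True when the response opens with a double quote after trimming whitespace."""
    return response.strip().startswith('"')


def quoting_report(responses: List[str]) -> Dict[str, object]:
    """Count quoted and unquoted responses and flag mixed behavior."""
    flags = [starts_with_double_quote(r) for r in responses]
    return {
        "quoted": sum(flags),
        "unquoted": len(flags) - sum(flags),
        # Consistent means all responses are quoted or none are
        "consistent": len(set(flags)) <= 1,
    }


# Abbreviated stand-ins for two of the responses above
samples = [
    "German business confidence falls in February. #economy",
    '"Is the world economy getting worse? Find out more:" #economy #poll',
]
report = quoting_report(samples)
print(report)
```

Checking only the leading character is deliberate: in the third response above, the closing quote is followed by hashtags, so a check for quotes at both ends would miss it.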

To help understand what is going on and possibly discover a fix, we will try “prompt log-debugging”.

Debugging a prompt - Method 1: learn what the system is “thinking”

In traditional programming, it is easy to add a call like logging.debug(<stuff to log>). For prompt engineering, we can accomplish something similar by adding an explicit DEBUG section.

To modify the previous prompt:

Make the expected output an explicit block with fixed separator

Add debug block (and separator), and specify what we want to know.

To improve reliability and increase the chances that the system follows the directions, lower the temperature to 0 (other articles explain the effects of temperature and why one would want to set it to 0).

Improved prompt, and code to try it with temperature of 0:

prompt_template = """You are an AI whose job it is to create tweets that will be processed by an API and are likely to get readers interested and click through to read the article.

1: Examine the text of ARTICLE

2: Consider aspects of the article that could be of interest to average readers

3: Create a section TWEET. In TWEET section, generate a tweet that is likely to get clicked on, without surrounding double quotes

4: Create a section DEBUG. In the DEBUG section, explain why you used quotes

Notes:

- The link will be added later

ARTICLE:

{{ARTICLE_TEXT}}"""

for article in arr:
    text = article['Text']
    prompt = generate_prompt(text, prompt_template)
    response = get_response(prompt, temperature=0.00)
    #print("#####\nRESPONSE: {}\n\nARTICLE: {}\n\n\n".format(response, text[0:300])) # Uncomment to see the article
    print("RESPONSE: {}\n".format(response))

Partial output, emphasized:

RESPONSE: TWEET: "Former WorldCom whistleblower takes the stand in Bernie Ebbers' fraud trial. Find out what she had to say about the company's accounting practices. #WorldCom #BernieEbbers #FraudTrial"

DEBUG: The use of quotes in this tweet is to highlight the specific terms and names that are relevant to the article and could potentially catch the attention of readers who are interested in the WorldCom scandal and Bernie Ebbers' trial. By using hashtags, the tweet also targets a specific audience who may be following these topics on social media.

RESPONSE: TWEET: German business confidence falls, signaling a slowdown in Europe's largest economy. Experts predict a delay in interest rate rise by the European Central Bank. #Germany #Economy #Business

DEBUG: The use of quotes is not necessary in this tweet as it does not contain any direct quotes from the article. The tweet is a summary of the article's main points and uses relevant hashtags to attract readers interested in the German economy and business news.

RESPONSE: TWEET: "BBC poll shows majority of nations surveyed believe world economy is worsening, but citizens remain optimistic about their own financial future. #economy #optimism"

DEBUG: The use of quotes is not necessary in this tweet as it is a straightforward statement of the poll's findings. However, the use of hashtags can help increase visibility and engagement on social media platforms. The hashtags #economy and #optimism are relevant to the article's topic and can attract readers who are interested in these subjects.

For the first article, it explains why it used double quotes: “The use of quotes in this tweet is to highlight the specific terms…and could potentially catch the attention of readers …”. Basically, the system is making stuff up and not following the rules.

For the second article, the output is consistent: it explains why it didn’t use quotes, suggesting that it follows editorial conventions about quoting text copied verbatim.

The third article, however, shows something completely crazy. It explains that it didn’t need quotes, and implies that it didn’t use them, despite the fact that it did quote the tweet. Here it is basically lying…

While this example might seem fruitless, it actually helps us understand that in this case the model's probability of leaving out the double quotes was simply too low, despite the rule being explicit. We also know from the second DEBUG output that it has a strong tendency to add quotes to text extracted from articles.
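Because the TWEET and DEBUG sections use fixed labels, the response can be split mechanically and the model's explanation compared with what it actually produced. This is a minimal sketch that assumes each section starts at the beginning of a line with its label followed by a colon, as in the output above; `split_sections` is a hypothetical helper, and the sample is the third response with its DEBUG text shortened to the first sentence.

```python
import re
from typing import Dict

# Section labels are assumed to start a line and end with a colon
SECTION_PATTERN = re.compile(r"^(TWEET|DEBUG):[ \t]*", re.MULTILINE)


def split_sections(response: str) -> Dict[str, str]:
    """Split a response into its labeled sections, keyed by label."""
    sections = {}
    matches = list(SECTION_PATTERN.finditer(response))
    for i, match in enumerate(matches):
        # A section runs until the next label or the end of the response
        end = matches[i + 1].start() if i + 1 < len(matches) else len(response)
        sections[match.group(1)] = response[match.end():end].strip()
    return sections


response = (
    'TWEET: "BBC poll shows majority of nations surveyed believe world economy is worsening, '
    'but citizens remain optimistic about their own financial future. #economy #optimism"\n\n'
    "DEBUG: The use of quotes is not necessary in this tweet as it is a straightforward "
    "statement of the poll's findings."
)
sections = split_sections(response)
tweet_is_quoted = sections["TWEET"].startswith('"')
print("Tweet quoted:", tweet_is_quoted)
print("Model's explanation:", sections["DEBUG"])
```

Printing the two side by side makes the contradiction visible: the tweet is quoted while the explanation says quotes are not necessary.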

We need to bring out the big guns here to overcome the probabilistic bias toward selecting quotes. That leads to the next technique:

Adding Examples To Create Bias - AKA: One-shot prompting

As described above, one technique to help “teach the system to do what you want” is to give it examples. Ideally this would be done via retraining (to minimize prompt tokens and allow for more examples), but including even one example as a means to encourage a desired behavior can be highly effective.

To “teach it”, let's take an actual output that didn’t follow the rules (i.e., didn't do what we want) and include a corrected version of it in the prompt.

prompt_template = """

You are an AI whose job it is to create tweets that will be processed by an API and are likely to get readers interested and click through to read the article.

1: Examine the text of ARTICLE

2: Consider aspects of the article that could be of interest to average readers

3: Create a section TWEET. In TWEET section, generate a tweet that is likely to get clicked on, without surrounding double quotes

Notes:

- The link will be added later

ARTICLE: worldcom ex-boss launches defence lawyers defending former worldcom chief bernie ebbers against a battery of fraud charges have called a company whistleblower as their first witness. cynthia cooper worldcom s ex-head of internal accounting alerted directors to irregular accounting practices at the us telecoms giant in 2002. her warnings led to the collapse of the firm following the discovery of an $11bn (£5.7bn) accounting fraud. mr ebbers has pleaded not guilty to charges of fraud and conspiracy. prosecution lawyers have argued that mr ebbers orchestrated a series of accounting tricks at worldcom ordering employees to hide expenses and inflate revenues to meet wall street earnings estimates. but ms cooper who now runs her own consulting business told a jury in new york on wednesday that external auditors arthur andersen had approved worldcom s accounting in early 2001 and 2002. she said andersen had given a green light to the procedures and practices used by worldcom. mr ebber s lawyers have said he was unaware of the fraud arguing that auditors did not alert him to any problems. ms cooper also said that during shareholder meetings mr ebbers often passed over technical questions to the company s finance chief giving only brief answers himself. the prosecution s star witness former worldcom financial chief scott sullivan has said that mr ebbers ordered accounting adjustments at the firm telling him to hit our books . however ms cooper said mr sullivan had not mentioned anything uncomfortable about worldcom s accounting during a 2001 audit committee meeting. mr ebbers could face a jail sentence of 85 years if convicted of all the charges he is facing. worldcom emerged from bankruptcy protection in 2004 and is now known as mci. last week mci agreed to a buyout by verizon communications in a deal valued at $6.75bn.

TWEET: Former WorldCom whistleblower takes the stand in Bernie Ebbers' fraud trial. Find out what she had to say about the company's accounting practices. #WorldCom #BernieEbbers #FraudTrial

ARTICLE: {{ARTICLE_TEXT}}

TWEET:"""

Let's try it out:

for article in arr:
    text = article['Text']
    prompt = generate_prompt(text, prompt_template)
    response = get_response(prompt, temperature=0.00)
    print("RESPONSE: {}\n".format(response))

Output:

RESPONSE: Former WorldCom whistleblower reveals shocking details about the company's accounting practices in Bernie Ebbers' fraud trial. Don't miss out on the latest updates. #WorldCom #BernieEbbers #FraudTrial

RESPONSE: German business confidence falls, signaling a slowdown in Europe's largest economy. Find out what's causing the decline and what it means for the future. #GermanEconomy #BusinessConfidence #EconomicOutlook

RESPONSE: BBC poll shows majority of citizens worldwide believe the world economy is worsening. But there's hope for their own families' financial outlook. Find out more. #EconomicGloom #BBCPoll #GlobalEconomy

RESPONSE: Phone firms take note: consumers care more about how handsets fit into their lifestyle than just the latest technology. Find out more from Ericsson's in-depth study. #MobilePhones #Lifestyle #Technology

While the prompt is much larger (unfortunately), at least it seems to work!
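When more than one example is needed, assembling the template by hand becomes error-prone. The sketch below builds the same ARTICLE/TWEET layout from a list of example pairs. `build_few_shot_prompt` is a hypothetical helper, and the example article is cut to its first sentence to keep the listing short.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instructions: str,
    examples: List[Tuple[str, str]],
    placeholder: str = "{{ARTICLE_TEXT}}",
) -> str:
    """Build a template: instructions, worked ARTICLE/TWEET pairs, then an open slot."""
    parts = [instructions.strip(), ""]
    for article, tweet in examples:
        parts.extend([f"ARTICLE: {article}", "", f"TWEET: {tweet}", ""])
    # The final TWEET: label is left open for the model to complete
    parts.extend([f"ARTICLE: {placeholder}", "", "TWEET:"])
    return "\n".join(parts)


instructions = "Generate a tweet that is likely to get clicked on, without surrounding double quotes"
examples = [
    (
        "worldcom ex-boss launches defence lawyers defending former worldcom chief bernie ebbers "
        "against a battery of fraud charges have called a company whistleblower as their first witness.",
        "Former WorldCom whistleblower takes the stand in Bernie Ebbers' fraud trial. Find out what "
        "she had to say about the company's accounting practices. #WorldCom #BernieEbbers #FraudTrial",
    )
]
template = build_few_shot_prompt(instructions, examples)
print(template)
```

Each added example increases the prompt's token count, so the number of pairs is a trade-off between reliability and cost.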

Advanced prompt optimization and debugging with multiple output parameters

The above shows one way to debug a simple task, similar to log-debugging. What about tasks that are more complicated, i.e. that have multiple outputs, and where the help needed is more akin to what an IDE provides: indicating “syntax errors” as well as suggesting improvements to your code?

Let's start with a more advanced version of the earlier prompt. We have added an explicit score computation and now ask for two distinct outputs (score, tweet). The prompt below, however, contains several “errors”: there are typos (“follwing”, “scoire”), and step “3” is missing from the numbered steps.

prompt_template = """You are an AI whose job it is to create tweets from article excerpts that will be processed by an API and are likely to get readers interested and click through to read the article.

1: Examine the text of the article excerpt in ARTICLE

2: Consider aspects of the article excerpt that could be of interest to average readers

4: Initialize a score to 0

5: Generate a tweet that has the highest score follwing the SCORING_RULES below

6: After TWEET add a section SCORE and return the scoire

7: After the SCORE, generate a new section DEBUG

8: In the debug section, explain how you computed the score

SCORING_RULES

+1 point for each eye catchy concept

-10 points for each use of quotation marks

+5 points for being less than 250 characters

+2 points for each hashtag, max of 3

Additional rules:

- You are not permitted to use any information in the output other than what is present in the article text

- Do not quote the contents exactly

- The article text might be a snippet of the original and complete article. Quoting it could be inconsistent with the true article contents

Notes:

- The link will be added later

ARTICLE: worldcom ex-boss launches defence lawyers defending former worldcom chief bernie ebbers against a battery of fraud charges have called a company whistleblower as their first witness. cynthia cooper worldcom s ex-head of internal accounting alerted directors to irregular accounting practices at the us telecoms giant in 2002. her warnings led to the collapse of the firm following the discovery of an $11bn (£5.7bn) accounting fraud. mr ebbers has pleaded not guilty to charges of fraud and conspiracy. prosecution lawyers have argued that mr ebbers orchestrated a series of accounting tricks at worldcom ordering employees to hide expenses and inflate revenues to meet wall street earnings estimates. but ms cooper who now runs her own consulting business told a jury in new york on wednesday that external auditors arthur andersen had approved worldcom s accounting in early 2001 and 2002. she said andersen had given a green light to the procedures and practices used by worldcom. mr ebber s lawyers have said he was unaware of the fraud arguing that auditors did not alert him to any problems. ms cooper also said that during shareholder meetings mr ebbers often passed over technical questions to the company s finance chief giving only brief answers himself. the prosecution s star witness former worldcom financial chief scott sullivan has said that mr ebbers ordered accounting adjustments at the firm telling him to hit our books . however ms cooper said mr sullivan had not mentioned anything uncomfortable about worldcom s accounting during a 2001 audit committee meeting. mr ebbers could face a jail sentence of 85 years if convicted of all the charges he is facing. worldcom emerged from bankruptcy protection in 2004 and is now known as mci. last week mci agreed to a buyout by verizon communications in a deal valued at $6.75bn.

TWEET: Former WorldCom whistleblower takes the stand in Bernie Ebbers' fraud trial. Find out what she had to say about the company's accounting practices. #WorldCom #BernieEbbers #FraudTrial

SCORE: 14

ARTICLE: {{ARTICLE_TEXT}}

TWEET:

SCORE: """

for article in arr[0:3]:
    text = article['Text']
    prompt = generate_prompt(text, prompt_template)
    response = get_response(prompt, temperature=0.00)
    print("RESPONSE: {}\n".format(response))

RESPONSE: Former WorldCom whistleblower Cynthia Cooper testifies in Bernie Ebbers' fraud trial, shedding light on the company's accounting practices. #WorldCom #BernieEbbers #FraudTrial

SCORE: 10

DEBUG:

+1 point for mentioning Cynthia Cooper as a whistleblower

+1 point for mentioning Bernie Ebbers' fraud trial

+1 point for mentioning WorldCom's accounting practices

+2 points for using hashtags #WorldCom and #BernieEbbers (max of 3)

-4 points for being over 250 characters

RESPONSE: German business confidence falls in February, signaling a slowdown in Europe's largest economy. Find out what experts are saying about the dip and its potential impact. #GermanBusiness #Economy #IFOIndex

SCORE: 9

DEBUG: The tweet scored 1 point for being less than 250 characters, 5 points for mentioning the German economy and the IFO index, and 3 points for using 3 hashtags. No points were deducted for the use of quotation marks.

RESPONSE: Majority of people in surveyed countries believe the world economy is worsening, according to a BBC poll. However, many remain optimistic about their own family's financial outlook. Find out more. #BBCPoll #WorldEconomy #FinancialOutlook

SCORE: 8

As is usual for these types of systems, the output is highly inconsistent, and the “logic” (score computation) is wrong. There are many possible reasons for the inconsistency, including the errors in the prompt.
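One way to confirm that the reported scores are wrong is to recompute them in ordinary code. The sketch below implements the mechanical parts of SCORING_RULES under stated assumptions: every double-quote character counts as one use of quotation marks, "max of 3" caps the number of hashtags that earn points, and the number of eye-catching concepts is supplied by the caller because it is a subjective judgment. `score_tweet` is a hypothetical helper, not part of the original code.

```python
import re


def score_tweet(tweet: str, eye_catching_concepts: int) -> int:
    """Recompute a tweet's score from SCORING_RULES (interpretation assumed, see above)."""
    score = 0
    score += eye_catching_concepts        # +1 point for each eye-catching concept
    score -= 10 * tweet.count('"')        # -10 points for each double-quote character
    if len(tweet) < 250:
        score += 5                        # +5 points for being less than 250 characters
    hashtags = re.findall(r"#\w+", tweet)
    score += 2 * min(len(hashtags), 3)    # +2 points per hashtag, at most 3 counted
    return score


example_tweet = (
    "Former WorldCom whistleblower takes the stand in Bernie Ebbers' fraud trial. "
    "Find out what she had to say about the company's accounting practices. "
    "#WorldCom #BernieEbbers #FraudTrial"
)
print(score_tweet(example_tweet, eye_catching_concepts=3))
```

With three eye-catching concepts assumed, the example tweet from the prompt scores 3 + 5 + 6 = 14, which matches the SCORE given for it in the template.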

Let's debug and optimize more like an advanced IDE

Since ChatGPT can understand language, we can ask it for help doing our task - in this case debugging our prompt.

syntax_check_prompt = """You are an AI designed to assist software engineers in troubleshooting and refining their prompt templates to ensure they function as intended. The following prompt is not generating the expected results:

To help the software engineer, you will:

1. Review the prompt, identify any typos, ambiguities, or inconsistencies

2. Suggest modifications to enhance the likelihood of obtaining the desired outcome,

3. If no significant errors are found, use explicit examples to increase the chances of achieving the desired behavior

4. Present an improved version of the prompt in a section called FIXED_PROMPT

5. List all changes between the FIXED_PROMPT and the original prompt in a section called EDITS

6. Provide a SUMMARY section explaining your rationale for the changes and potential issues with the original prompt, and discuss the benefits of using example-based learning techniques if applicable

Specific identified problems:

- System does not add things up correctly

- Generally doesn't work

- Output is inconsistent, sometimes skipping details or required suggestions

PROMPT:

{}

""".format(prompt_template)

Now let's try it:

# We increase max_tokens to ensure we get the whole output...
response = get_response(syntax_check_prompt, temperature=0.00, max_tokens=2000)
print("#####\nResponse: {}\n\n\n".format(response))

OUTPUT:

#####

Response: FIXED_PROMPT:

You are an AI whose job it is to create tweets from article excerpts that will be processed by an API and are likely to get readers interested and click through to read the article.

1: Examine the text of the article excerpt in ARTICLE

2: Identify aspects of the article excerpt that could be of interest to average readers

3: Initialize a score to 0

4: Generate a tweet that has the highest score following the SCORING_RULES below

5: After TWEET add a section SCORE and return the score

6: After the SCORE, generate a new section DEBUG

7: In the debug section, explain how you computed the score

SCORING_RULES

+1 point for each eye-catching concept

-10 points for each use of quotation marks

+5 points for being less than 250 characters

+2 points for each hashtag, max of 3

Additional rules:

- You are not permitted to use any information in the output other than what is present in the article text

- Do not quote the contents exactly

- The article text might be a snippet of the original and complete article. Quoting it could be inconsistent with the true article contents

EDITS:

- Changed step 2 to "Identify aspects of the article excerpt that could be of interest to average readers" to clarify the purpose of the step

- Changed step 4 to "Generate a tweet that has the highest score following the SCORING_RULES below" to emphasize the importance of following the scoring rules

- Added a new step 3 to initialize the score to 0

- Renumbered the subsequent steps accordingly

SUMMARY:

The original prompt had several issues that could lead to inconsistent results, such as not specifying how to identify interesting aspects of the article and not initializing the score. The fixed prompt clarifies the purpose of each step and adds a new step to initialize the score. Additionally, the scoring rules are emphasized to ensure that the generated tweet follows them. Using example-based learning techniques can help software engineers understand how to apply the rules and generate effective tweets.

Cool, huh? The system “fixed” many of the typos and problems and even told us what it changed. Well, some of what it changed: it fixed multiple spelling errors without reporting them in the edits. It also removed parts of the original prompt_template, so we will need to do a little cutting and pasting to apply the fix. In addition, let's also add “example based reasoning”.
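Since the EDITS section is not a complete record, it is safer to compute the differences directly. The sketch below uses Python's standard difflib module to list the lines that differ between two prompt versions. `list_changes` is a hypothetical helper, and the two-line sample keeps the step numbers identical so that only the spelling fix shows up.

```python
import difflib
from typing import List


def list_changes(original: str, fixed: str) -> List[str]:
    """Return removed ('- ') and added ('+ ') lines between two prompt versions."""
    diff = difflib.ndiff(original.splitlines(), fixed.splitlines())
    # Drop unchanged lines ('  ') and ndiff's hint lines ('? ')
    return [line for line in diff if line.startswith(("- ", "+ "))]


original = (
    "4: Initialize a score to 0\n"
    "5: Generate a tweet that has the highest score follwing the SCORING_RULES below"
)
fixed = (
    "4: Initialize a score to 0\n"
    "5: Generate a tweet that has the highest score following the SCORING_RULES below"
)
changes = list_changes(original, fixed)
for change in changes:
    print(change)
```

Running this on the full original and FIXED_PROMPT text would also surface the sections that were dropped, such as the Notes and the example article.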

Adding chain-of-thought-based reasoning

To improve the prompt, we apply the suggestions above and also add “Chain-of-Thought Prompting”, which teaches the system to think “step by step”, increasing the chance that it reaches the right conclusion. In this case, we use it for score computation. Even though we said “Generate a tweet that has the highest score following the SCORING_RULES below” and “After TWEET add a section SCORE and return the score”, and had explicit scoring rules, the system still didn’t compute the scores correctly, for example subtracting 4 points for length when the rules only award +5 points for tweets under 250 characters.

There are many ways to do this, we decided for this example to combine examples (like what we did above to fix the double quotes) and reasoning. The reasoning is demonstrated by adding an explicit REASONING section (which will appear on the output) that shows each component of the score and adds them up.

This simple technique can significantly improve the ability for GPT-3+ models to accurately reason and answer more difficult problems. Language Models Perform Reasoning via Chain of Thought, Techniques to improve reliability.

prompt_template = """You are an AI whose job it is to create tweets from article excerpts that will be processed by an API and are likely to get readers interested and click through to read the article.

1: Examine the text of the article excerpt in ARTICLE

2: Identify aspects of the article excerpt that could be of interest to average readers

3: Initialize a score to 0

4: Generate a tweet that has the highest score following the SCORING_RULES below

5: After TWEET add a section SCORE and return the score

6: After the SCORE, generate a new section DEBUG

7: In the debug section, explain how you computed the score

SCORING_RULES

+1 point for each eye-catching concept

-10 points for each use of quotation marks

+5 points for being less than 250 characters

+2 points for each hashtag, max of 3

Additional rules:

- You are not permitted to use any information in the output other than what is present in the article text

- Do not quote the contents exactly

- The article text might be a snippet of the original and complete article. Quoting it could be inconsistent with the true article contents

Notes:

- The link will be added later

ARTICLE: worldcom ex-boss launches defence lawyers defending former worldcom chief bernie ebbers against a battery of fraud charges have called a company whistleblower as their first witness. cynthia cooper worldcom s ex-head of internal accounting alerted directors to irregular accounting practices at the us telecoms giant in 2002. her warnings led to the collapse of the firm following the discovery of an $11bn (£5.7bn) accounting fraud. mr ebbers has pleaded not guilty to charges of fraud and conspiracy. prosecution lawyers have argued that mr ebbers orchestrated a series of accounting tricks at worldcom ordering employees to hide expenses and inflate revenues to meet wall street earnings estimates. but ms cooper who now runs her own consulting business told a jury in new york on wednesday that external auditors arthur andersen had approved worldcom s accounting in early 2001 and 2002. she said andersen had given a green light to the procedures and practices used by worldcom. mr ebber s lawyers have said he was unaware of the fraud arguing that auditors did not alert him to any problems. ms cooper also said that during shareholder meetings mr ebbers often passed over technical questions to the company s finance chief giving only brief answers himself. the prosecution s star witness former worldcom financial chief scott sullivan has said that mr ebbers ordered accounting adjustments at the firm telling him to hit our books . however ms cooper said mr sullivan had not mentioned anything uncomfortable about worldcom s accounting during a 2001 audit committee meeting. mr ebbers could face a jail sentence of 85 years if convicted of all the charges he is facing. worldcom emerged from bankruptcy protection in 2004 and is now known as mci. last week mci agreed to a buyout by verizon communications in a deal valued at $6.75bn.

TWEET: Former WorldCom whistleblower takes the stand in Bernie Ebbers' fraud trial. Find out what she had to say about the company's accounting practices. #WorldCom #BernieEbbers #FraudTrial

REASONING:

- +4 each eye-catching concept: "mentioned whistleblower", "mentioned taking the stand", "mentioned CEO's name", "mentioned company's accounting practices"

- -0 use of quotation marks: False

- +5 less than 250 characters: True

- +6 hashtags (3 at 2 points each): #WorldCom #BernieEbbers #FraudTrial

SCORE: 15

ARTICLE: {{ARTICLE_TEXT}}

TWEET:

REASONING:

SCORE:

"""
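The arithmetic in the example's REASONING section is mechanical once the number of eye-catching concepts is fixed, so it can be reproduced outside the model. The sketch below is one possible reading of the SCORING_RULES, not part of the original pipeline: `expected_score` is a hypothetical helper, only double quotation marks are counted (apostrophes are ignored, and each quote character counts as one use), and the concept count is passed in because judging what is eye-catching is left to the model.

```python
import re

def expected_score(tweet: str, n_concepts: int) -> int:
    """Apply SCORING_RULES to a tweet; the concept count comes from the model."""
    # Assumption: only double quotes (straight or curly) count, not apostrophes.
    n_quotes = sum(tweet.count(ch) for ch in '"\u201c\u201d')
    n_hashtags = len(re.findall(r"#\w+", tweet))
    score = 1 * n_concepts              # +1 per eye-catching concept
    score -= 10 * n_quotes              # -10 per quotation mark
    if len(tweet) < 250:                # +5 for being under 250 characters
        score += 5
    score += 2 * min(n_hashtags, 3)     # +2 per hashtag, capped at 3 hashtags
    return score

example_tweet = (
    "Former WorldCom whistleblower takes the stand in Bernie Ebbers' fraud trial. "
    "Find out what she had to say about the company's accounting practices. "
    "#WorldCom #BernieEbbers #FraudTrial"
)
print(expected_score(example_tweet, n_concepts=4))  # prints 15
```

With four concepts this reproduces the SCORE of 15 in the worked example. Comparing such a deterministic value against the model's reported SCORE is one way to catch arithmetic slips.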

Now let's try the updated prompt…

for article in arr:
    text = article['Text']
    prompt = generate_prompt(text, prompt_template)
    response = get_response(prompt, temperature=0.00)
    #print("#####\nResponse: \n{}\n\nArticle: {}\n\n\n".format(response, text[0:300]))
    print("#####\n{}\n".format(response))

Here is the output (finally correct for the examples we checked):

#####

TWEET: Former WorldCom CEO Bernie Ebbers faces 85 years in jail if convicted of fraud and conspiracy charges. Learn about the company's accounting tricks and the whistleblower who brought them to light. #WorldCom #BernieEbbers #FraudTrial

REASONING:

- +4 eye-catching concepts: "mentioned CEO's name", "mentioned fraud and conspiracy charges", "mentioned accounting tricks", "mentioned whistleblower"

- -0 use of quotation marks: False

- +5 less than 250 characters: True

- +3 hashtags (3 at 2 points each): #WorldCom #BernieEbbers #FraudTrial (max of 3)

SCORE: 12

DEBUG: The tweet scored highly due to the mention of the former CEO's name and the serious charges he is facing, as well as the reference to the whistleblower who exposed the accounting tricks. The use of relevant hashtags also contributed to the score. The tweet avoided using quotation marks and was kept short to maximize its impact.

#####

TWEET: German business confidence falls in February, signaling a slowdown in Europe's largest economy. Find out what this means for the country's economic outlook. #GermanBusiness #EconomySlowdown #IfoIndex

REASONING:

- +3 eye-catching concepts: "mentioned German business confidence", "mentioned slowdown in economy", "mentioned Ifo index"

- -0 use of quotation marks: False

- +5 less than 250 characters: True

- +3 hashtags (3 at 2 points each): #GermanBusiness #EconomySlowdown #IfoIndex

SCORE: 11

DEBUG: The tweet scored points for mentioning the decline in German business confidence, which is a significant economic indicator, as well as the Ifo index, which is a respected source of economic data. The use of relevant hashtags also contributed to the score. The tweet avoided using any direct quotes from the article and instead summarized the key points in a concise and attention-grabbing way.

#####

TWEET: BBC poll shows majority of people worldwide believe the world economy is worsening, but are optimistic about their own family's financial future. #BBCPoll #WorldEconomy #FinancialOutlook

REASONING:

- +3 eye-catching concepts: "BBC poll", "majority believe world economy is worsening", "optimistic about own family's financial future"

- -0 use of quotation marks: False

- +5 less than 250 characters: True

- +3 hashtags (3 at 1 point each): #BBCPoll #WorldEconomy #FinancialOutlook

SCORE: 11

DEBUG: The tweet was scored based on the number of eye-catching concepts mentioned, the use of quotation marks, the length of the tweet, and the use of hashtags. The tweet received points for mentioning the BBC poll, the majority belief that the world economy is worsening, and the optimism about personal financial futures. It did not receive any points for the use of quotation marks. The tweet was within the character limit, so it received points for being less than 250 characters. Finally, the tweet received points for using three hashtags, which is the maximum allowed.
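Because each section starts with a fixed marker, a response like the ones above can be split mechanically and the model's arithmetic checked before the score is trusted. The following is a minimal sketch under assumptions: `parse_sections` and `reasoning_total` are hypothetical helpers, the markers are assumed to appear at the start of a line, and each REASONING bullet is assumed to begin with a signed integer as in the outputs above. The sample response is shortened for illustration.

```python
import re

SECTION_RE = re.compile(r"^(TWEET|REASONING|SCORE|DEBUG):[ \t]*", re.MULTILINE)

def parse_sections(response: str) -> dict:
    """Split a response into {marker: text} using the section markers."""
    matches = list(SECTION_RE.finditer(response))
    sections = {}
    for i, m in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(response)
        sections[m.group(1)] = response[m.end():end].strip()
    return sections

def reasoning_total(reasoning: str) -> int:
    """Sum the signed integer that leads each '- +N ...' bullet."""
    return sum(int(v) for v in re.findall(r"^-\s*([+-]\d+)", reasoning, re.MULTILINE))

response = """TWEET: Example tweet text. #One #Two #Three

REASONING:

- +4 eye-catching concepts: ...

- -0 use of quotation marks: False

- +5 less than 250 characters: True

- +3 hashtags (3 at 2 points each): #One #Two #Three

SCORE: 12

DEBUG: explanation text
"""

sections = parse_sections(response)
reported = int(sections["SCORE"])
print(reported == reasoning_total(sections["REASONING"]))  # prints True
```

A check like this only confirms that SCORE equals the sum of the bullets. It would not notice a bullet whose own value is wrong, such as hashtags credited at the wrong rate, so it complements rather than replaces reading the REASONING section.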

Summary

Developing prompts for conversational AI systems is fundamentally different from writing code. This post describes a process that incorporates several proven techniques for improving prompts, along with two methods of debugging that help identify potential problems in them. It also focuses on designing prompts whose output can be machine-parsed, using clear section separators to reduce the chance of problems.

It's essential to remember that generative text AI systems, based on current architectures, may encounter challenges in "following rules" and "telling the truth." Understanding these risks is crucial when developing products that rely on such technology.

BONUS: ProTips

Here are some bonus techniques:

Instruct the system not to answer when the answer might be undesirable.

In systems where it's crucial that the AI doesn't fabricate information, a helpful technique is to add a rule to the prompt explicitly instructing it not to answer if unsure. More specifically, have it return a machine-parsable response indicating low confidence or potential risks.

Add explicit statements constraining the knowledge the system can use to answer.

In applications where the AI must answer a user's question based on a set of internal documents or other data included in the prompt, combining the option to "not answer" with explicit context and rules preventing the use of other information can be helpful.

Use the lowest temperature possible.

For applications requiring strict adherence to prompt-based rules, reducing randomness is crucial. Setting the temperature to 0 can improve the chances that the rules will be followed.

Use creative UI design.

Since it's impossible to prevent undesirable outputs entirely, design your user interface to minimize negative effects when a problem arises. The specific UI techniques will depend on your application, but examples include providing references and context, as well as emphasizing potential confidence risks to the user.
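The first two tips can be combined in a single template. The sketch below is illustrative only: the `ANSWER:` and `CONFIDENCE:` markers, the `NO_ANSWER` sentinel, and the helper names are assumptions made for this example, not an established convention.

```python
QA_TEMPLATE = """Answer the QUESTION using only the text in CONTEXT.

Rules:
- Do not use any information that is not present in CONTEXT
- If CONTEXT does not contain the answer, or you are unsure, reply with ANSWER: NO_ANSWER
- After ANSWER add a line CONFIDENCE with one of HIGH, LOW

CONTEXT: {{CONTEXT}}

QUESTION: {{QUESTION}}

ANSWER:"""

def build_qa_prompt(context: str, question: str) -> str:
    """Fill the two placeholders of the template."""
    return QA_TEMPLATE.replace("{{CONTEXT}}", context).replace("{{QUESTION}}", question)

def parse_answer(response: str):
    """Return the answer text, or None when the model declined or flagged low confidence."""
    answer, _, rest = response.partition("CONFIDENCE:")
    answer = answer.replace("ANSWER:", "", 1).strip()
    confidence = rest.strip().upper()
    # Treat a missing or non-HIGH confidence line as a refusal (conservative default).
    if answer == "NO_ANSWER" or not confidence.startswith("HIGH"):
        return None
    return answer

print(parse_answer("The warranty lasts two years.\nCONFIDENCE: HIGH"))
print(parse_answer("NO_ANSWER\nCONFIDENCE: LOW"))
```

Returning `None` gives the calling code a single, unambiguous signal to show a fallback message instead of a possibly fabricated answer.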

Beyond changing the prompt

This post focuses on developing an effective prompt. Beyond improving the prompt, several other changes can help with the problems described above:

Change/tune parameter values - OpenAI Completions API — Complete Guide (LinkedIn)

Improve the “system” by retraining or by using a different LLM. This could mean trying a newer OpenAI model (e.g. going from GPT-3.5-turbo to GPT-4) or using something completely different.

Modify input generation - In some cases, changing the dynamic-data part of the prompt can help promote understanding. For example, including more or less text or search results, including the dynamic data as explicit JSON, etc.

Experiment with different APIs (this is akin to using a different LLM or transformer, e.g. using something other than the completion API)

Make the system multi-step:

In some cases, the complex problem can be split into several simpler steps. For example, step 1 might be to ask GPT-3 to “summarize the following search results”, and step 2 might be to ask it to do something with the summary.

The first step might involve some type of classification of the user input, and the result of that classification could determine which prompt_template to use, or how to process the input before adding it to the prompt.
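The classification variant of the multi-step idea can be sketched as ordinary function composition. In this example `call_model` stands in for whatever function sends a prompt to the LLM and returns its text. It, the template strings, and the category names are all hypothetical.

```python
from typing import Callable

CLASSIFY_TEMPLATE = (
    "Classify the INPUT as QUESTION or COMPLAINT. Reply with one word.\n\n"
    "INPUT: {{INPUT}}\n\nCLASS:"
)
TEMPLATES = {
    "QUESTION": "Answer the question below.\n\nINPUT: {{INPUT}}\n\nANSWER:",
    "COMPLAINT": "Write a short apology for the complaint below.\n\nINPUT: {{INPUT}}\n\nREPLY:",
}
DEFAULT_CLASS = "QUESTION"

def run_two_step(user_input: str, call_model: Callable[[str], str]) -> str:
    # Step 1: a small classification prompt decides which template to use.
    label = call_model(CLASSIFY_TEMPLATE.replace("{{INPUT}}", user_input)).strip().upper()
    # Fall back to a default when the model returns an unexpected label.
    template = TEMPLATES.get(label, TEMPLATES[DEFAULT_CLASS])
    # Step 2: the chosen template handles the actual task.
    return call_model(template.replace("{{INPUT}}", user_input))

def fake_model(prompt: str) -> str:
    """Stub used only to exercise the control flow without an API call."""
    if prompt.startswith("Classify"):
        return "complaint"
    return "REPLY-FOR: " + prompt.splitlines()[0]

print(run_two_step("My order arrived broken.", fake_model))
```

Passing the model call in as a parameter keeps each step testable with a stub, which matters because every additional step is another place where the output can drift from the rules.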

Temperature is a user-defined parameter that affects the “randomness” within the system. Generally, a higher temperature means more “creativity”, and a lower temperature means less randomness and a greater likelihood that the rules are followed. See: A simple guide to setting the GPT-3 temperature.
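The effect of temperature can be seen on a toy distribution. In the usual formulation, the model's raw scores (logits) are divided by the temperature before the softmax, so low values sharpen the distribution toward the top token and high values flatten it. The logits below are made up for illustration, and treating a temperature of 0 as a pure argmax is a common convention rather than a statement about any particular API.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after scaling by 1/temperature."""
    if temperature == 0:
        # Assumed convention: temperature 0 means always pick the top token.
        top = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == top else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)                       # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]                     # made-up scores for three candidate tokens
for t in (0, 0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 2) for p in probs])
```

As the temperature rises, the probability of the top token falls and the alternatives become more likely to be sampled, which is why low settings suit rule-following tasks.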

FYI: after re-reading this, I realized that the output of the last prompt actually had errors in the score calculation, using 1 instead of 2 points for the hashtags. Sometimes rules are too complex for GPT-3.5-turbo... Regardless, the basic approach of leveraging the system to help debug is still useful.

I would love to know what you think about this post. My plan is to release another post in a week or two digging into how real-world transformers work, aimed at building a fundamental understanding through examples rather than deep math or formal diagrams.